
Assessing and optimizing Core Web Vitals is crucial for improving both the user experience and the search engine rankings of your website. Core Web Vitals are a set of specific metrics that measure the loading speed, interactivity, and visual stability of web pages. In this ultimate guide, I will walk you through how to assess and optimize Core Web Vitals so that your website performs at its best.

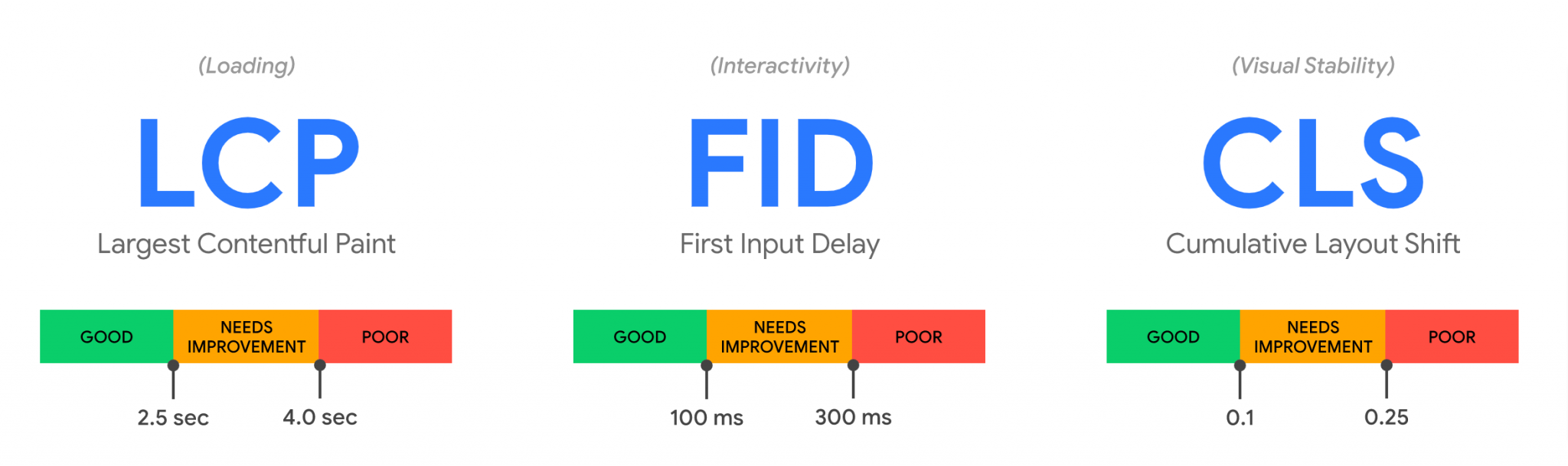

Understanding Core Web Vitals:

Largest Contentful Paint (LCP):

Measures the loading speed by identifying when the largest element in the viewport becomes visible.

First Input Delay (FID):

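Measures interactivity as the delay between the user’s first interaction (a click, tap, or key press) and the moment the browser can begin processing it. Note that in March 2024 Google replaced FID with Interaction to Next Paint (INP) as the Core Web Vital for responsiveness; reducing main thread work, as described in the FID section of this guide, helps both metrics.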

Cumulative Layout Shift (CLS):

Measures visual stability by quantifying how much visible content shifts unexpectedly, both during page loading and throughout the rest of the page’s lifetime.
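To make the three definitions concrete, here is a minimal sketch that rates a measured value against the thresholds Google publishes for each metric (LCP: 2.5 s and 4 s, FID: 100 ms and 300 ms, CLS: 0.1 and 0.25). The names `rateMetric` and `THRESHOLDS` are illustrative and not part of any library.

```typescript
// Published thresholds per metric: [upper bound for "good", upper bound for "needs improvement"].
// LCP and FID are in milliseconds; CLS is a unitless score.
type Metric = "LCP" | "FID" | "CLS";
type Rating = "good" | "needs improvement" | "poor";

const THRESHOLDS: Record<Metric, [number, number]> = {
  LCP: [2500, 4000],
  FID: [100, 300],
  CLS: [0.1, 0.25],
};

// Rates a single measurement; anything above the second bound is "poor".
function rateMetric(metric: Metric, value: number): Rating {
  const [good, needsImprovement] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= needsImprovement) return "needs improvement";
  return "poor";
}
```

For example, an LCP of 3,000 ms would be rated "needs improvement", while a CLS of 0.3 would be rated "poor".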

Assessing Core Web Vitals:

Use Google’s PageSpeed Insights:

Enter your website’s URL into PageSpeed Insights to get a comprehensive report on your Core Web Vitals performance. The report shows field data from real Chrome users where enough is available, lab data from a simulated page load, and recommendations for improvement.
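PageSpeed Insights can also be queried programmatically through its public v5 API, which is handy for checking many pages. The sketch below only builds the request URL; the helper name `buildPsiUrl` is an assumption of this example, and the API key is optional for occasional use.

```typescript
// Endpoint of the public PageSpeed Insights API (version 5).
const PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed";

type Strategy = "MOBILE" | "DESKTOP";

// Builds the request URL for one page. `buildPsiUrl` is a hypothetical helper name.
function buildPsiUrl(pageUrl: string, strategy: Strategy = "MOBILE", apiKey?: string): string {
  const params = [
    `url=${encodeURIComponent(pageUrl)}`,
    `strategy=${strategy}`,
    "category=PERFORMANCE",
  ];
  if (apiKey) params.push(`key=${encodeURIComponent(apiKey)}`);
  return `${PSI_ENDPOINT}?${params.join("&")}`;
}
```

Fetching the resulting URL returns JSON in which field data appears under `loadingExperience` and lab data under `lighthouseResult`.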

Utilize Chrome DevTools:

Open Chrome DevTools, navigate to the Performance tab, and record a page load. The resulting trace provides detailed information on various performance metrics, including Core Web Vitals.

Improving Largest Contentful Paint (LCP):

Optimize server response times:

Reduce server response times by using a reliable hosting provider, optimizing database queries, and utilizing caching mechanisms.
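As one illustration of a caching mechanism, the following sketch wraps a slow lookup (for example a database query) in a small in-memory cache with a time-to-live. The function name `withTtlCache` and its parameters are assumptions for this example; production sites would more often rely on a dedicated cache or CDN.

```typescript
// Wraps an expensive lookup so repeated requests for the same key within
// `ttlMs` milliseconds are served from memory instead of the slow path.
// `now` is injectable so the expiry logic can be tested with a fake clock.
function withTtlCache<T>(
  load: (key: string) => T,
  ttlMs: number,
  now: () => number = Date.now,
): (key: string) => T {
  const entries = new Map<string, { value: T; expiresAt: number }>();
  return (key: string): T => {
    const hit = entries.get(key);
    if (hit && hit.expiresAt > now()) return hit.value; // still fresh
    const value = load(key); // cache miss or expired entry
    entries.set(key, { value, expiresAt: now() + ttlMs });
    return value;
  };
}
```

The trade-off is freshness: a longer TTL saves more server time but serves stale data for longer.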

Optimize and compress images:

Resize images to appropriate dimensions and compress them using efficient formats (e.g., WebP) to reduce file sizes.
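Serving appropriately sized images usually means offering the browser several pre-resized files and letting it pick one. This sketch generates the `srcset` value for such a set; the `<base>-<width>.<ext>` file naming scheme and the helper name `buildSrcset` are hypothetical.

```typescript
// Builds a `srcset` value listing one pre-resized file per width.
// Assumes files are named "<basePath>-<width>.<ext>", which is a made-up convention.
function buildSrcset(basePath: string, widths: number[], ext: string = "webp"): string {
  return widths
    .slice() // copy so the caller's array is not reordered
    .sort((a, b) => a - b)
    .map((w) => `${basePath}-${w}.${ext} ${w}w`)
    .join(", ");
}
```

The returned string goes into the `srcset` attribute of an `<img>` or `<source>` element, together with a `sizes` attribute that describes the rendered width.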

Use lazy loading:

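Implement lazy loading so that off-screen images and other below-the-fold resources load only when they are about to become visible. Do not lazy-load the image that is likely to be the LCP element, because delaying it makes LCP worse.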
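Browsers support lazy loading natively through the `loading` attribute on `<img>`, and a `fetchpriority` hint for the most important image. A minimal sketch of choosing those attributes, assuming the page template already knows which image is the likely LCP element (the `likelyLcp` flag and `loadingHints` helper are hypothetical):

```typescript
interface PageImage {
  src: string;
  likelyLcp: boolean; // true for the hero image expected to be the LCP element
}

// Returns attribute values for an <img>: the likely LCP image loads eagerly
// with high priority, everything else is deferred until it nears the viewport.
function loadingHints(image: PageImage): { loading: "eager" | "lazy"; fetchpriority?: "high" } {
  return image.likelyLcp
    ? { loading: "eager", fetchpriority: "high" }
    : { loading: "lazy" };
}
```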

Enhancing First Input Delay (FID):

Minimize main thread work:

Reduce JavaScript execution time by removing unnecessary scripts, optimizing code, and deferring non-critical tasks.
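One common way to defer non-critical work is to split a long loop into chunks and yield to the main thread between them, so the browser can respond to input in the gaps. The sketch below uses `setTimeout` for yielding; the function names and the default chunk size of 50 are assumptions, not tuned values.

```typescript
// Resolves on a later task, giving the browser a chance to handle pending
// input and rendering before the next chunk runs.
function yieldToMain(): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, 0));
}

// Applies `work` to every item, pausing after each chunk of `chunkSize` items.
async function processInChunks<T, R>(
  items: T[],
  work: (item: T) => R,
  chunkSize: number = 50,
): Promise<R[]> {
  const size = Math.max(1, Math.floor(chunkSize)); // guard against zero or negative sizes
  const results: R[] = [];
  for (let start = 0; start < items.length; start += size) {
    for (const item of items.slice(start, start + size)) {
      results.push(work(item));
    }
    await yieldToMain();
  }
  return results;
}
```

Smaller chunks keep the page more responsive at the cost of a longer total running time.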

Optimize third-party scripts:

Evaluate and replace third-party scripts that cause performance issues or introduce delays.

Implement code splitting:

Break down large JavaScript files into smaller, manageable chunks and load them only when necessary.
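With most bundlers, code splitting is triggered by a dynamic `import()`. The helper below is a small sketch that requests a chunk on first use and reuses the same promise afterwards; `lazyOnce` and the `./charts` module in the usage comment are hypothetical names.

```typescript
// Wraps a loader so the chunk is requested only on first use and the same
// promise is returned on every later call.
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return (): Promise<T> => {
    if (!pending) pending = loader();
    return pending;
  };
}

// Usage with a bundler that splits on dynamic import() (module path is hypothetical):
//   const loadCharts = lazyOnce(() => import("./charts"));
//   button.addEventListener("click", async () => (await loadCharts()).render());
```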

Mitigating Cumulative Layout Shift (CLS):

Set dimensions for elements:

Specify width and height attributes for images, videos, and other visual elements to allocate proper space in the layout.
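The reason width and height attributes help is that browsers derive an aspect ratio from them and reserve the matching space before the file arrives. A short sketch of that arithmetic (the function name is illustrative):

```typescript
// Height the browser can reserve for an image rendered at `renderedWidth`,
// derived from the aspect ratio of its width and height attributes.
function reservedHeight(attrWidth: number, attrHeight: number, renderedWidth: number): number {
  return Math.round((renderedWidth * attrHeight) / attrWidth);
}
```

For an image declared as 1600 by 900 and rendered 800 pixels wide, the reserved height is 450 pixels, so the content below it does not move when the image loads.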

Avoid dynamic content shifts:

Reserve space for ads and dynamically injected content to prevent layout shifts.
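To see how individual shifts add up, the sketch below computes a CLS score from a list of shifts using the session-window definition: shifts are grouped into windows with gaps under 1 second and a maximum span of 5 seconds, and the largest window total is reported. The field names mirror the browser's layout-shift entries, but the interface and function are written for this example and assume the input is sorted by `startTime`.

```typescript
interface LayoutShiftSample {
  startTime: number;       // milliseconds since navigation start
  value: number;           // layout shift score of this single shift
  hadRecentInput: boolean; // true if the shift followed recent user input
}

// Returns the largest session-window total; input must be sorted by startTime.
function cumulativeLayoutShift(shifts: LayoutShiftSample[]): number {
  let worst = 0;
  let windowScore = 0;
  let windowStart = 0;
  let previous = 0;
  let windowOpen = false;

  for (const shift of shifts) {
    if (shift.hadRecentInput) continue; // shifts caused by user input are expected

    const continuesWindow =
      windowOpen &&
      shift.startTime - previous < 1000 &&
      shift.startTime - windowStart < 5000;

    if (continuesWindow) {
      windowScore += shift.value;
    } else {
      windowScore = shift.value; // start a new session window
      windowStart = shift.startTime;
      windowOpen = true;
    }
    previous = shift.startTime;
    worst = Math.max(worst, windowScore);
  }
  return worst;
}
```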

Load web fonts properly:

Specify font sizes and use the font-display property to control how text is displayed while web fonts are loading.

Ongoing Monitoring and Maintenance:

Continuously monitor performance:

Regularly test your website’s Core Web Vitals using tools like PageSpeed Insights and Chrome DevTools to identify any performance regressions.
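Regression checks can be automated by comparing each new measurement with a stored baseline. The following is a minimal sketch under the assumption that you record LCP, FID, and CLS yourself; the `Vitals` shape, the `findRegressions` name, and the 10% tolerance are illustrative choices.

```typescript
type Vitals = { lcpMs: number; fidMs: number; cls: number };

// Returns the metrics whose current value exceeds the baseline by more than
// `tolerance` (0.1 means 10%). Lower is better for all three metrics.
function findRegressions(baseline: Vitals, current: Vitals, tolerance: number = 0.1): (keyof Vitals)[] {
  const keys: (keyof Vitals)[] = ["lcpMs", "fidMs", "cls"];
  return keys.filter((key) => current[key] > baseline[key] * (1 + tolerance));
}
```

Running a check like this after every deployment surfaces regressions before they accumulate in field data.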

Stay updated with best practices:

Keep up with the latest web development guidelines and best practices to optimize your website’s performance.

Monitor user feedback:

Pay attention to direct user feedback and to engagement signals such as bounce rates and session durations to gauge the actual user experience and identify areas for improvement.

By following this ultimate guide, you’ll be able to assess and optimize your website’s Core Web Vitals effectively, leading to improved user satisfaction, better search engine rankings, and ultimately, a more successful online presence.

Why might the Page Experience report not be populated with data?

Google’s Martin Splitt explains to site owners why their Page Experience report in Search Console may not be populated with data. What it comes down to, Splitt says, is that those sites are not generating enough field data for Google to confidently deliver a report that represents what users are experiencing. To be clear, a lack of data is not an indication of any errors. The reason why other tools are able to generate reports is that they use lab data rather than field data. Splitt adds that even sites that receive a fair amount of traffic may not be generating the telemetry data Google needs to issue a report.

“Not enough field data. It can be enough visitors, if these visitors are not generating telemetry data then we are still not having the telemetry data. And even if we have some data it might not be enough for us to confidently say that this is the data we think represents the actual signal. So we might decide to actually not have a signal for that if the data source is too flaky or if the data is too noisy. … More traffic is more likely to generate data quickly but it’s not a guarantee.”
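The same idea can be sketched in code. Google assesses Core Web Vitals at the 75th percentile of real page loads, so a field-data summary might refuse to report a value until enough samples exist. The minimum of 100 samples below is purely an assumption for illustration; Google does not publish the threshold it uses.

```typescript
// Returns the 75th percentile of the samples, or null when there is too
// little field data to report a trustworthy value.
// The default `minSamples` of 100 is a made-up cutoff, not Google's.
function p75(samples: number[], minSamples: number = 100): number | null {
  if (samples.length === 0 || samples.length < minSamples) return null;
  const sorted = samples.slice().sort((a, b) => a - b);
  const index = Math.ceil(0.75 * sorted.length) - 1; // nearest-rank percentile
  return sorted[index] ?? null;
}
```

A lab tool, by contrast, always has a value to show because it measures a single simulated load rather than waiting for real visitors.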
